Detour: Dynamic Programming

- Dynamic Programming

- A general algorithm design technique for solving problems defined by or formulated as recurrences with overlapping sub-instances

- In this context, Programming == Planning

- Main storyline

- Setting up a recurrence

- Relating a solution of a larger instance to solutions of some smaller instances

- Solve small instances once

- Record solutions in a table

- Extract a solution of a larger instance from the table

Forward Probability Calculation

- Need to know $\alpha_t^k$

- Time X States

- When we know $\alpha_t^k$ with X, then we know the value of $P(X)$

- Answering the evalutation question without Z

- ForwardAlgorithm

- Initialize

- $\alpha_t^k = b_{k, x_t} \sum_i \alpha_{t-1}^ia_{i,k}$

- Return $\sum_i \alpha_T^i$

- Initialize

- Proof of correctness

- $\sum_i \alpha_T^i = \sum_i P(x_1, \cdots , x_T, Z_T^i =1)=P(x_1, \cdots ,x_t)$

- Where to use the memorization table?

- $\alpha_t^k$

- Limitation of the forward probability

- Only takes the input sequence of X befor time t

- $P(x_1, \cdots , x_t, Z_t^k = 1) = \alpha_t^K and t \neq T$

- Need to see a probability distribution of a latent variable at time t given the whole X

- Recall the bayes ball algorithm

Backward Probability Calculation

- 이를 계산하면 dynamic programming을 활용 가능함

- Forward 와 Backword를 활용하면 Decoding 문제를 해결가능함

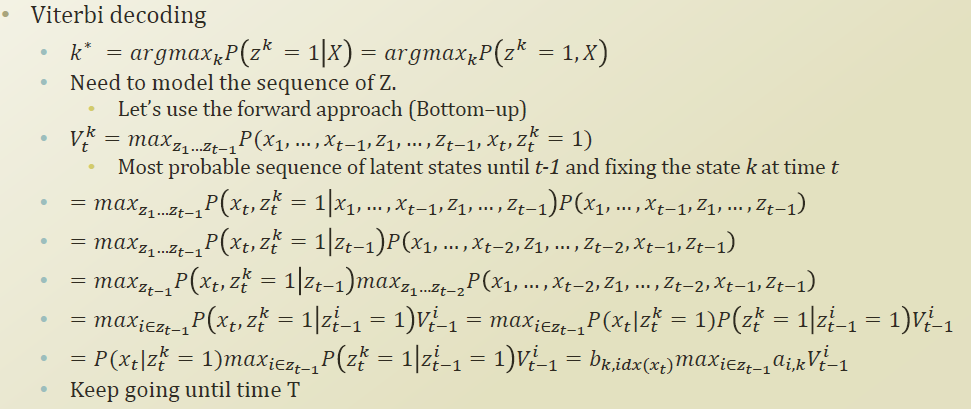

Viterbi Decoding

- Need to know $V_t^k$

- Time X States

- Store $V_t^k$ Two variables to store the trace and the probability up to time $t$: Two memorization tables

- Answering the decoding question with $\pi, a, b, X$

- ViterbiDecodingAlgorithm

- Initialize

- $V_1^k = b_{k, x_1}, \pi _k$

- Iterate until time T

- $V_t^k = b_{k, idx(x_t)} max_{i \in Z_{t-1}} a_{i, k}V_{t-1}^i$

- $trace_t^k = argmax_i\in Z_{t-1} a_{i,k}V_{t-1}^i$

- Return $P(X, Z^*) = max_kV_T^k, Z_T^* = argmax_kV_T^k,Z_{t-1}^* = trace_t^{Z_t^*}$

- Technical difficulites in the implemtation

- Very frequent underflow problems

- Turn this into the log domain -> from multiplication to summation

- (지속적으로 확률의 곱셈이 이어지므로 너무 많은 소수점들의 곱셈 연산이 이어짐 -> log변환 후 연산)

'기계학습 > 인공지능및기계학습개론정리' 카테고리의 다른 글

| Sampling Based Inference (Markov Chain) -작성 중 (0) | 2020.12.23 |

|---|---|

| Sampling Based Inference(Forward/Rejection/Importance Sampling) (0) | 2020.11.26 |

| Hidden Markov Model(1: Joint, Marginal Probability of HMM) (0) | 2020.11.16 |

| Bayesian Network (0) | 2020.11.03 |

| Naive Bayes Classifier + Logistic Regression (parameter approximation) (0) | 2020.08.27 |